Naive Bayes and Decision Tree: Which One Gives the Best Results?

Compare the results of your decision tree with those of the Naive Bayes algorithm. The results below come from a classification problem that uses a decision tree, Naive Bayes, and 1-nearest-neighbor (1-NN) as classifiers.

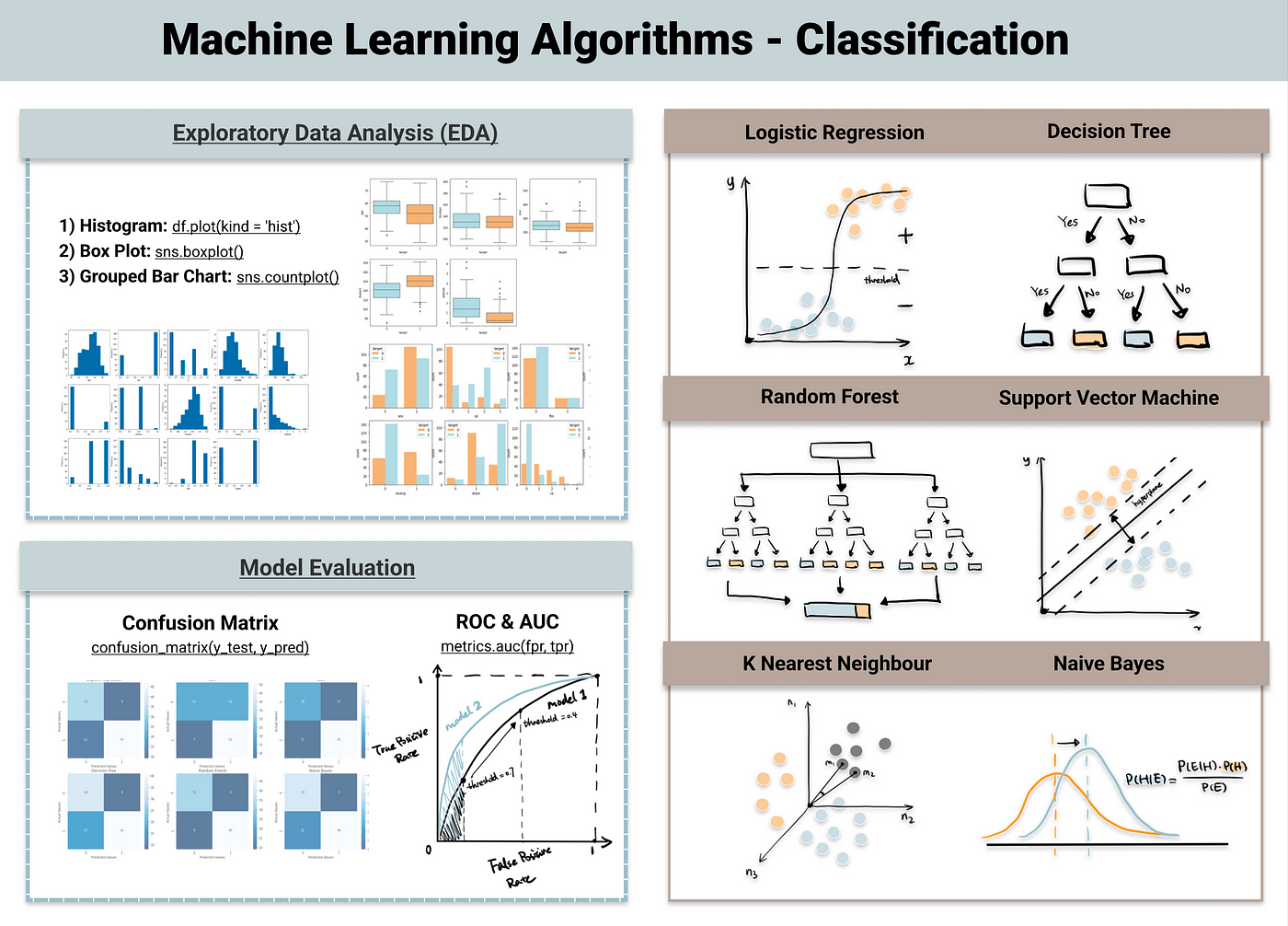

Classification Algorithms Random Forest And Naive Bayes

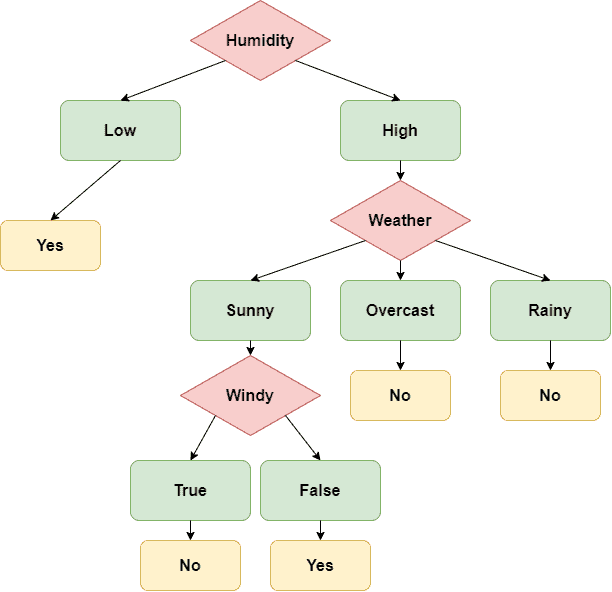

Decision trees are easy to use when there is only a small number of classes.

KNN (with a reasonably large k) is relatively insensitive to outliers, decision trees are good at dealing with irrelevant features, and Naïve Bayes is good at handling multiple classes. Naive Bayes is used a lot in robotics and computer vision and does quite well on those tasks. It outperforms the decision tree and k-nearest neighbor on every measure except precision.

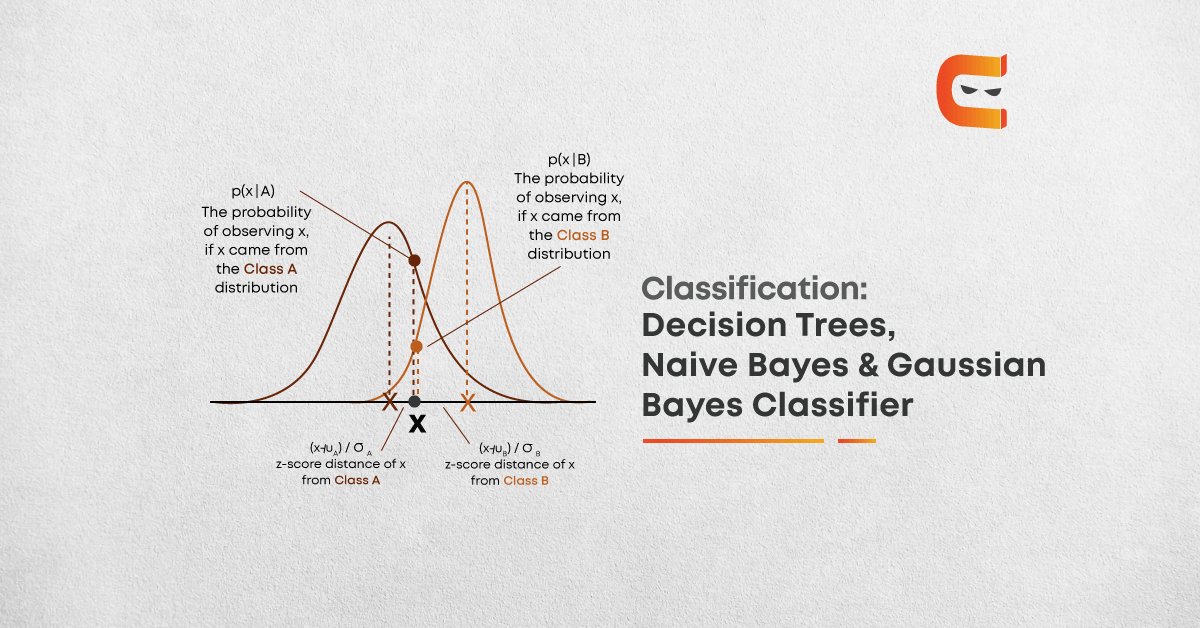

Table 5 describes the datasets used in the experimental analysis. The performance of both proposed hybrid algorithms, the hybrid decision tree and the hybrid naïve Bayes classifier, is tested on 10 real benchmark datasets from the UCI Machine Learning Repository (Frank and Asuncion, 2010). Naive Bayes classifiers are a family of classification algorithms based on Bayes' theorem.

As can be seen in Table 1, the decision tree model gives better average values, i.e., better accuracy in predicting true positives and true negatives, than the Naïve Bayes model.

PCCs (percentages of correct classification) relative to the normal and abnormal connections:

| Model | Set | PCC (%) |
|---|---|---|
| Decision tree | Training | 99.99, 99.99, 99.99 |
| Decision tree | Testing | 93.02, 93.55, 92.52 |
| Naive Bayes | Training | 98.71, 99.31, 99.24 |
| Naive Bayes | Testing | 91.45, 92.69, 92.40 |

As with the previous experiments, Table 4 shows that the gap between decision trees and naive Bayes is insignificant, since the PCCs are almost identical.

Naive Bayes is based on applying Bayes' theorem with naive independence assumptions. J48, Weka's implementation of the C4.5 decision tree algorithm, is used for classification; it splits numeric attributes with binary tests. To evaluate the performance of naive Bayes, we compare its results on the same data with those of decision trees [12], considered one of the best-known learning methods.
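
In symbols, the naive independence assumption lets the class posterior factor into a prior times one likelihood per feature. The notation below is the standard textbook form, not taken from the compared studies: $y$ is a class and $x_1, \dots, x_n$ are the feature values.

$$
P(y \mid x_1, \dots, x_n) = \frac{P(y)\prod_{i=1}^{n} P(x_i \mid y)}{P(x_1, \dots, x_n)} \propto P(y)\prod_{i=1}^{n} P(x_i \mid y),
\qquad
\hat{y} = \arg\max_{y} \; P(y)\prod_{i=1}^{n} P(x_i \mid y)
$$

The denominator is the same for every class, so it can be dropped when only the most probable class is needed.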

Which is more accurate? When the same query is used with the decision tree model, it gives different results for different inputs, and the results appear correct. Experimental results on a variety of natural domains indicate that Self-adaptive NBTree has clear advantages in generalization ability.

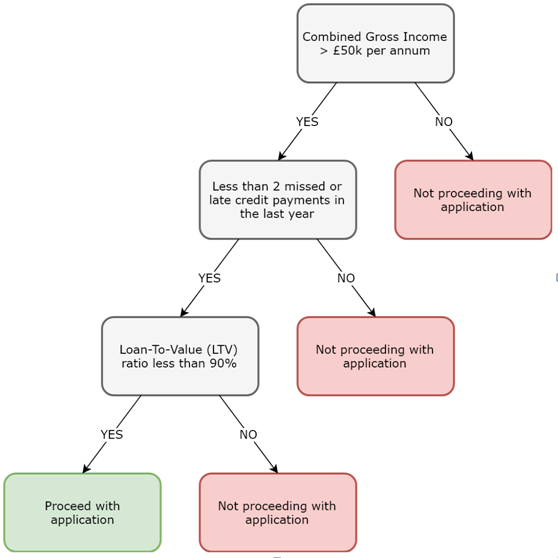

Naive Bayes is based on Bayes' theorem and assumes that there is no interdependence among the variables, which limits the applicability of the algorithm in real-world use cases. Decision tree pruning, for its part, may discard some key values in the training data, which can throw the accuracy off.
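
To see why the independence assumption can hurt, here is a small sketch on hypothetical XOR-style data, where the label depends only on the interaction between two binary features. The data and the `naive_bayes_posterior` helper are illustrative assumptions, not code from any of the compared studies.

```python
from collections import Counter

# Hypothetical XOR-style data: the label is 1 exactly when the two binary features differ.
data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)] * 25  # 100 rows


def naive_bayes_posterior(data, x):
    """Return P(y=1 | x) under the naive independence assumption (no smoothing)."""
    labels = Counter(y for _, y in data)
    scores = {}
    for y, n_y in labels.items():
        score = n_y / len(data)  # prior P(y)
        for i, value in enumerate(x):
            # P(x_i = value | y), estimated from counts within class y
            match = sum(1 for feats, lab in data if lab == y and feats[i] == value)
            score *= match / n_y
        scores[y] = score
    return scores[1] / sum(scores.values())


for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x, naive_bayes_posterior(data, x))
```

Each feature on its own is equally common in both classes, so every input gets a posterior of exactly 0.5 and the classifier cannot separate the classes. A decision tree that splits on the first feature and then on the second separates them perfectly.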

For example, a fruit may be classified as a banana if it is yellow-green in colour, banana-shaped, and 1-2 cm in radius; Naive Bayes treats each of these features as contributing independently to the probability that the fruit is a banana. We show that naive Bayes is very competitive and that its performance difference with respect to decision trees is not significant.
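
The banana example can be worked through numerically. The priors and likelihoods below are made-up numbers, not measurements; the sketch only shows that the posterior is the prior times the product of per-feature likelihoods, normalised over the classes.

```python
# Hypothetical class priors and per-feature likelihoods P(feature | fruit);
# all numbers are invented for illustration, not estimated from real data.
priors = {"banana": 0.5, "orange": 0.3, "other": 0.2}
likelihoods = {
    "banana": {"yellow_green": 0.8, "banana_shaped": 0.9, "radius_1_2cm": 0.7},
    "orange": {"yellow_green": 0.1, "banana_shaped": 0.01, "radius_1_2cm": 0.05},
    "other": {"yellow_green": 0.3, "banana_shaped": 0.1, "radius_1_2cm": 0.3},
}
observed = ["yellow_green", "banana_shaped", "radius_1_2cm"]


def posterior(priors, likelihoods, observed):
    # Unnormalised score: prior times the product of independent feature likelihoods
    scores = {}
    for fruit, prior in priors.items():
        score = prior
        for feature in observed:
            score *= likelihoods[fruit][feature]
        scores[fruit] = score
    # Normalise so the posteriors sum to 1
    total = sum(scores.values())
    return {fruit: s / total for fruit, s in scores.items()}


post = posterior(priors, likelihoods, observed)
print(max(post, key=post.get), post)
```

With these invented numbers the banana posterior comes out at about 0.99.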

Building a decision tree is a greedy process in which the training data is repeatedly sorted into sub-regions. Naive Bayes and k-NN are both examples of supervised learning, where the data comes already labeled.

To start with, let us consider a dataset. If you're trying to decide between the three, your best option is to take all three for a test drive on your data and see which produces the best results. For each split, a decision tree chooses the feature to split on and the value at which to split it.

Naive Bayes is a popular choice for text classification problems. It is a simple classification algorithm that uses the probabilities of events to make predictions, and it assumes that all predictors (features) are independent, which rarely holds in real life.
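
Since text classification is the typical use, here is a minimal multinomial Naive Bayes sketch with Laplace (add-one) smoothing, which also shows how Naive Bayes copes when the training data does not contain every word. The toy documents and the `train`/`predict` names are hypothetical.

```python
import math
from collections import Counter, defaultdict


def train(docs):
    """docs: list of (text, label). Returns class priors, per-class word counts, vocabulary."""
    class_docs = Counter(label for _, label in docs)
    word_counts = defaultdict(Counter)
    vocab = set()
    for text, label in docs:
        words = text.lower().split()
        word_counts[label].update(words)
        vocab.update(words)
    priors = {c: n / len(docs) for c, n in class_docs.items()}
    return priors, word_counts, vocab


def predict(model, text):
    priors, word_counts, vocab = model
    best_label, best_score = None, -math.inf
    for label, prior in priors.items():
        total = sum(word_counts[label].values())
        # Work in log space to avoid underflow when multiplying many small probabilities
        score = math.log(prior)
        for word in text.lower().split():
            # Laplace (add-one) smoothing: words unseen in this class still get a non-zero probability
            score += math.log((word_counts[label][word] + 1) / (total + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Hypothetical toy training set
docs = [
    ("win a free prize now", "spam"),
    ("free money click now", "spam"),
    ("meeting agenda for monday", "ham"),
    ("lunch with the team on monday", "ham"),
]
model = train(docs)
print(predict(model, "free prize"))
print(predict(model, "monday meeting"))
```

Without the `+ 1` in the numerator, a single word that never appeared in a class would drive that class's probability to zero.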

Decision trees tend to work better than Naive Bayes when there is a lot of data. In some comparisons, the Naive Bayes classifier gives better results than other classification algorithms such as logistic regression, tree-based algorithms, and support vector machines.

A decision tree is a discriminative model, whereas Naive Bayes is a generative model. The authors of one comparison concluded that Naive Bayes outperformed the decision tree and KNN in terms of accuracy. Naive Bayes does quite well when the training data doesn't contain all possibilities, so it can be very good with small amounts of data.

The classification times of the decision tree and Naïve Bayes differ by about an order of magnitude, as do those of Naïve Bayes and k-NN. Naive Bayes performs well in multi-class prediction compared to the other algorithms.

For example, SVM is good at handling missing data. Note that some Naive Bayes implementations do not support continuous columns, whereas decision trees do support them.

Naive Bayes assumes that every pair of features being classified is independent of each other. The standard deviation is computed over the samples. The decision tree is then built recursively for each sub-region.
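
The greedy procedure this section describes (within a region pick the best feature and split value, sort the training data into the sub-regions, recurse) can be sketched as follows. This is a hypothetical minimal implementation for numeric features: it uses Gini impurity as the split criterion, which is CART's choice (C4.5, and hence J48, uses gain ratio instead), and the `max_depth` limit is an added assumption.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(rows, labels):
    """Greedy step: try every feature and every observed value as a threshold and
    keep the (feature, value) pair with the lowest weighted Gini impurity."""
    best = None  # (impurity, feature, value)
    for f in range(len(rows[0])):
        for value in sorted({r[f] for r in rows}):
            left = [y for r, y in zip(rows, labels) if r[f] <= value]
            right = [y for r, y in zip(rows, labels) if r[f] > value]
            if not left or not right:
                continue  # a split must produce two non-empty sub-regions
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            if best is None or score < best[0]:
                best = (score, f, value)
    return best


def build_tree(rows, labels, depth=0, max_depth=3):
    majority = Counter(labels).most_common(1)[0][0]
    # Stop when the region is pure or the depth limit is reached
    if gini(labels) == 0 or depth == max_depth:
        return majority
    split = best_split(rows, labels)
    if split is None:  # no valid split exists (all rows identical)
        return majority
    _, f, value = split
    # Sort the training data into the two sub-regions
    left = [(r, y) for r, y in zip(rows, labels) if r[f] <= value]
    right = [(r, y) for r, y in zip(rows, labels) if r[f] > value]
    # Recursively build a subtree for each sub-region
    return {
        "feature": f,
        "value": value,
        "left": build_tree([r for r, _ in left], [y for _, y in left], depth + 1, max_depth),
        "right": build_tree([r for r, _ in right], [y for _, y in right], depth + 1, max_depth),
    }


def predict(tree, row):
    """Follow threshold tests down to a leaf label."""
    while isinstance(tree, dict):
        tree = tree["left"] if row[tree["feature"]] <= tree["value"] else tree["right"]
    return tree


# Hypothetical XOR-style data: the label depends on both features jointly
rows = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [0, 1, 1, 0]
tree = build_tree(rows, labels)
print(tree)
print([predict(tree, r) for r in rows])
```

Each split is chosen only for its immediate impurity reduction, which is what makes the algorithm greedy; it never revisits an earlier split.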

Hence Naive Bayes is preferred in applications that involve text, such as spam filters and sentiment analysis. Naive Bayes is also better suited to categorical input variables than to numerical ones.

There are 10,000 data objects, and these results were validated using 10-fold cross-validation. Decision trees are more flexible and easy to use. Naive Bayes can be used for binary as well as multi-class classification.
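
Because the results here were validated with 10-fold cross-validation, and the advice is to test-drive all three classifiers on your own data, here is a minimal sketch of k-fold cross-validation that reports the mean and sample standard deviation of per-fold accuracy. The 1-nearest-neighbor predictor, the synthetic two-cluster data, and the function names are assumptions for illustration; any `predict(train, x)` function, such as a decision tree or Naive Bayes wrapper, could be passed in instead.

```python
import random
import statistics


def one_nearest_neighbor(train, x):
    """Hypothetical 1-NN predictor: return the label of the closest training point
    (squared Euclidean distance)."""
    _, label = min(train, key=lambda item: sum((a - b) ** 2 for a, b in zip(item[0], x)))
    return label


def k_fold_accuracies(data, predict, k=10, seed=0):
    """Shuffle once, split into k folds, and return the accuracy on each held-out fold."""
    rows = data[:]
    random.Random(seed).shuffle(rows)
    folds = [rows[i::k] for i in range(k)]
    scores = []
    for i, test in enumerate(folds):
        # Train on the other k - 1 folds, evaluate on fold i
        train = [row for j, fold in enumerate(folds) if j != i for row in fold]
        correct = sum(predict(train, x) == y for x, y in test)
        scores.append(correct / len(test))
    return scores


# Hypothetical data: two noisy 2-D clusters, label 0 around (0, 0) and label 1 around (3, 3)
rng = random.Random(42)
data = [((rng.gauss(0, 1), rng.gauss(0, 1)), 0) for _ in range(100)] + [
    ((rng.gauss(3, 1), rng.gauss(3, 1)), 1) for _ in range(100)
]

scores = k_fold_accuracies(data, one_nearest_neighbor, k=10)
# statistics.stdev is the sample standard deviation (divides by n - 1)
print(f"mean accuracy {statistics.mean(scores):.3f}, sample std {statistics.stdev(scores):.3f}")
```

A smaller spread of fold accuracies is the sense in which the text calls a model robust: its performance changes little when the training data changes.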

Naive Bayes is not a single algorithm but a family of algorithms that all share a common principle: every pair of features is assumed to be independent given the class. Each dataset is roughly equivalent to a two-dimensional spreadsheet or a database table. On the other hand, the Naive Bayes model's standard deviation values are smaller, which means its predictions are less affected by changes in the data, i.e., it is a robust model.

Within a region, pick the best split. Naïve Bayes is one of the fast and easy ML algorithms for predicting the class of a dataset. It is likely that a bad model will be able to give good results.

Both find non-linear solutions and can capture interactions between independent variables. The Naive Bayes node helps to solve the overgeneralization and overspecialization problems that are often seen in decision trees. Decision trees are constructed using a greedy technique and can be pruned using reduced-error pruning.
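
Reduced-error pruning can be sketched as follows. This assumes a hypothetical tree format in which every internal node stores its majority training label in a `majority` field; a subtree is replaced by that label whenever doing so does not lower accuracy on a held-out validation set. The tree and validation rows are invented for illustration.

```python
def predict(tree, row):
    """Follow threshold tests (row[feature] <= value goes left) until reaching a leaf label."""
    while isinstance(tree, dict):
        tree = tree["left"] if row[tree["feature"]] <= tree["value"] else tree["right"]
    return tree


def prune(tree, validation):
    """Reduced-error pruning, bottom-up. `validation` holds the (row, label) pairs reaching this node."""
    if not isinstance(tree, dict):
        return tree  # already a leaf
    f, v = tree["feature"], tree["value"]
    left_rows = [(x, y) for x, y in validation if x[f] <= v]
    right_rows = [(x, y) for x, y in validation if x[f] > v]
    # Prune the children first, each with the validation rows routed to it
    tree = dict(tree, left=prune(tree["left"], left_rows), right=prune(tree["right"], right_rows))
    subtree_correct = sum(predict(tree, x) == y for x, y in validation)
    leaf_correct = sum(tree["majority"] == y for _, y in validation)
    # Collapse to a leaf if that is at least as accurate (this also collapses
    # nodes that no validation row reaches, since both counts are then 0)
    return tree["majority"] if leaf_correct >= subtree_correct else tree


# Hypothetical tree whose right-hand subtree overfits the training data
tree = {
    "feature": 0, "value": 5, "majority": "no",
    "left": "no",
    "right": {"feature": 1, "value": 2, "majority": "yes", "left": "no", "right": "yes"},
}
validation = [((1, 0), "no"), ((7, 1), "yes"), ((8, 3), "yes"), ((6, 0), "yes")]
pruned = prune(tree, validation)
print(pruned)
```

Here the inner split on feature 1 gets only one of the three validation rows that reach it right, so it collapses to `"yes"`, while the root split is kept. This is also how pruning can discard values that a larger validation set would have shown to matter.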

Only one column, age, was used as continuous; all the rest were used as discrete. It really gives good results. The Support Vector Machine (SVM) algorithm is based on statistical learning theory.
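
For Naive Bayes implementations that accept only discrete columns, a continuous column such as age has to be discretized first. A minimal sketch using equal-width bins follows; equal-width binning, the three-bin setting, and the sample ages are assumptions, since the source does not say how its discrete columns were produced.

```python
def equal_width_bins(values, n_bins):
    """Map each numeric value to a bin index 0..n_bins-1 using equal-width intervals."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / n_bins
    if width == 0:
        return [0] * len(values)  # all values identical: a single bin
    # min(..., n_bins - 1) keeps the maximum value in the last bin instead of a new one
    return [min(int((v - lo) / width), n_bins - 1) for v in values]


ages = [18, 22, 25, 31, 38, 44, 52, 60, 67, 75]  # hypothetical ages
print(equal_width_bins(ages, 3))
```

The bin indices can then be treated as ordinary categorical values when counting P(age bin | class).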

Based on precision, recall, F-measure, accuracy, and AUC, Naïve Bayes performs best.
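
For reference, here is a minimal sketch of how these five measures can be computed for a binary problem. The labels, scores, and 0.5 threshold are hypothetical; AUC is computed as the probability that a randomly chosen positive is scored above a randomly chosen negative (ties counting half), which equals the area under the ROC curve.

```python
def binary_metrics(y_true, y_pred, scores):
    """Precision, recall, F-measure and accuracy from hard predictions,
    plus AUC from continuous scores (positive class = 1)."""
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    tn = sum(t == 0 and p == 0 for t, p in zip(y_true, y_pred))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f_measure = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = (tp + tn) / len(y_true)
    # AUC: fraction of (positive, negative) pairs where the positive scores higher; ties count half
    pos = [s for s, t in zip(scores, y_true) if t == 1]
    neg = [s for s, t in zip(scores, y_true) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    auc = wins / (len(pos) * len(neg))
    return {"precision": precision, "recall": recall, "f_measure": f_measure,
            "accuracy": accuracy, "auc": auc}


y_true = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.6, 0.2, 0.1]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # hypothetical 0.5 threshold
m = binary_metrics(y_true, y_pred, scores)
print(m)
```

Precision, recall, F-measure, and accuracy depend on the chosen threshold, whereas AUC uses only the ranking of the scores, which is why studies often report both kinds.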
